Last month, visual artists across the internet raised their voices against machine learning-based image generators like DALL-E, Midjourney, Imagen, and Stable Diffusion, which generate images using models trained on enormous datasets of artwork, photographs, and other images sourced from the internet.

This started on a large scale after the visual art portfolio site ArtStation featured an AI-generated piece on its explore page. Artists on the platform flooded its explore page with a graphic captioned “NO TO AI GENERATED IMAGES”.

Artists Are Upset

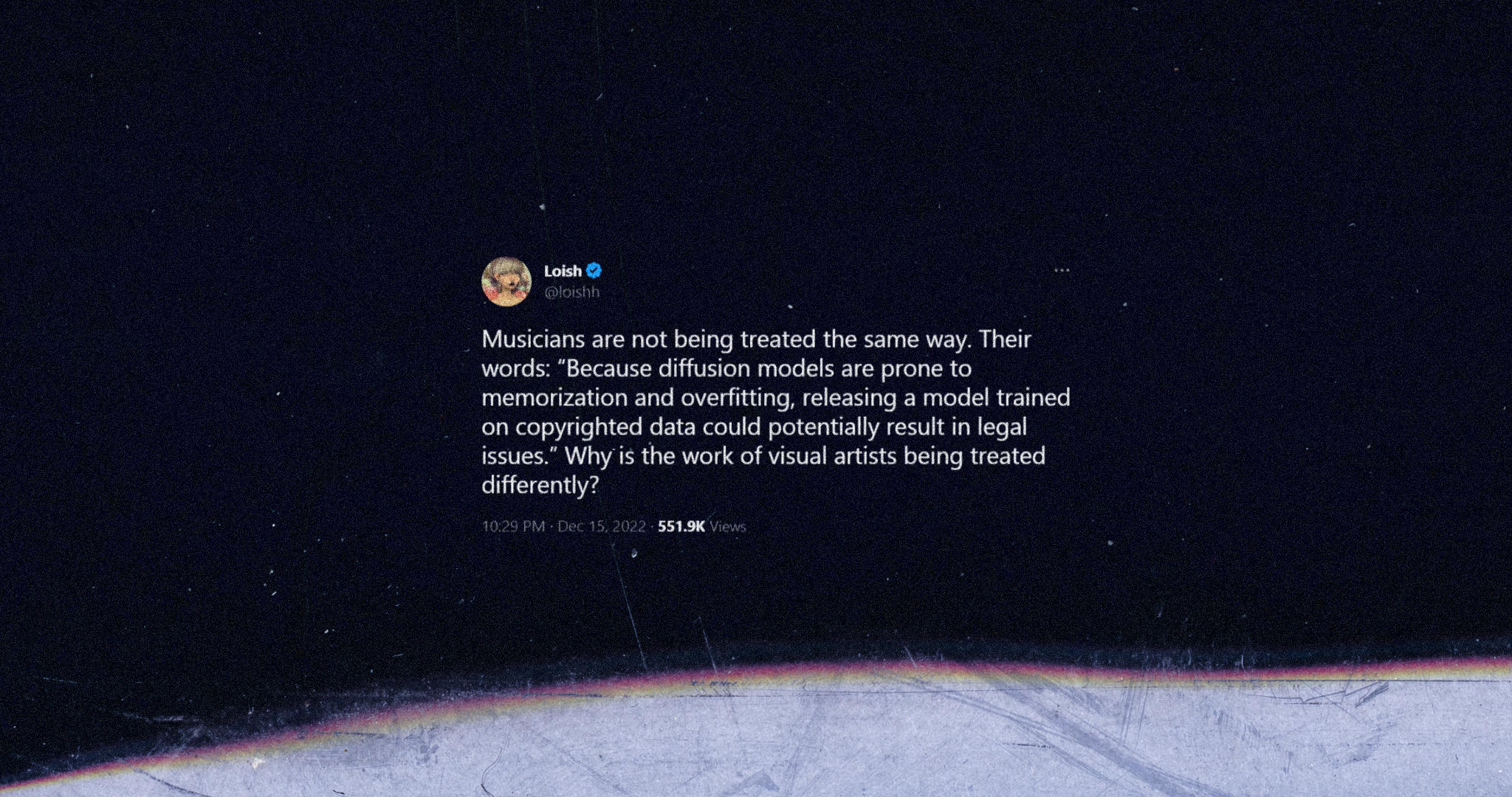

Artists on other platforms, including Loish and Mike Mignola, also rejected the exploitative approach of these corporations, which train their software on copyrighted artwork without the knowledge or consent of its owners.

About a week ago, Getty Images published a statement announcing their legal proceedings in the High Court of Justice in London against Stability AI for copyright infringement. Getty Images claimed that Stability AI “unlawfully copied and processed millions of images protected by copyright and the associated metadata owned or represented by Getty Images”.

Opt-In By Default

Google’s Imagen and Stability AI’s Stable Diffusion use datasets from LAION, which contain image-text pairs in the form of image URLs and their linked ALT descriptions. Imagen uses the LAION-400M dataset, while Stable Diffusion was trained on LAION-5B, a dataset whose creation Stability AI helped fund.

Stability AI has announced that people can opt into or out of having their images used in the training of Stable Diffusion V3 by signing up at haveibeentrained.com and following the necessary steps.

Many are still upset by the fact that Stability AI does not opt people out of having their images used by default.

As of now, ArtStation has updated their terms of service to disallow scraping and redistributing content that lives on their platform; scraping is the primary method for building these datasets. They have also prohibited the use of content marked ‘NoAI’ with ‘Generative AI Programs’. ‘NoAI’ is a directive DeviantArt introduced to communicate this restriction. All posts on ArtStation now carry the ‘NoAI’ meta tag by default.
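To make the ‘NoAI’ idea concrete, here is a minimal sketch of how a well-behaved scraper could check a page for the directive before using its images. It assumes the directive appears as `noai` or `noimageai` inside a robots meta tag, which is how I understand DeviantArt's proposal; the exact markup ArtStation emits may differ, and the function name `opts_out_of_ai` and both sample pages are made up for illustration.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            # The content attribute is a comma-separated list of directives.
            for directive in attr_map.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def opts_out_of_ai(html: str) -> bool:
    """Return True if the page carries a 'noai' or 'noimageai' directive (assumed names)."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return bool(parser.directives & {"noai", "noimageai"})


# Hypothetical pages, one tagged and one not.
tagged_page = '<html><head><meta name="robots" content="noai, noimageai"></head></html>'
untagged_page = '<html><head><meta name="robots" content="index, follow"></head></html>'

print(opts_out_of_ai(tagged_page))    # True
print(opts_out_of_ai(untagged_page))  # False
```

Note that a tag like this only communicates a wish. Nothing in the markup stops a scraper that chooses to ignore it, which is why the terms of service matter as well.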

Why Is This Theft?

While some people are worried about ‘AI’ robots pushing them out of their jobs, many are concerned about the fact that these image generators are only as good as their datasets. Because there is a direct relationship between the datasets these programs ‘learn’ from and the output they are capable of generating, many artists feel that unauthorized use of their work to ‘train’ programs infringes their copyright.

On their website’s FAQ page, LAION says that their datasets “are simply indexes to the internet”: lists of URLs to the original images, paired with their ALT text entries. This means that while LAION does not host images without their owners’ consent, they distribute a database of URLs for accessing them, from which a user “must reconstruct the images data by downloading the subset they are interested in.”
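The shape of such an index can be sketched in a few lines. This is only an illustration under my own assumptions: the `IndexEntry` type, the `select_subset` helper, and the example URLs are hypothetical, and the real datasets are far larger and ship in their own file formats.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IndexEntry:
    url: str       # where the image is hosted, not the image itself
    alt_text: str  # the ALT description paired with that URL


# Hypothetical entries standing in for a real index.
index = [
    IndexEntry("https://example.com/art/dragon.png", "A watercolor painting of a dragon"),
    IndexEntry("https://example.com/photos/cat.jpg", "Photo of a cat on a sofa"),
    IndexEntry("https://example.org/gallery/knight.png", "Digital painting of a knight"),
]


def select_subset(entries, keyword):
    """Return the entries whose ALT text mentions the keyword."""
    keyword = keyword.lower()
    return [entry for entry in entries if keyword in entry.alt_text.lower()]


paintings = select_subset(index, "painting")
for entry in paintings:
    # A real pipeline would download entry.url here to rebuild the image data.
    print(entry.url, "->", entry.alt_text)
```

The index itself contains no pixels. The copying happens in the download step, which is carried out by whoever uses the index.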

This is rather interesting, because it might mean that LAION acknowledges the possibility, if not the probability, that redistributing content without permission is an act of copyright infringement. It is worth considering whether distributing URLs and ALT text makes LAION The Pirate Bay of machine learning programs, whose makers often profit from software trained on these datasets.

It may also be worth considering what it means to train a program on pre-existing work. Is training an ML program simply a form of data compression, where the algorithm strips away unnecessary data and stores the rest in a new, reusable format?

Throughout this article and everywhere else on the web, you will notice that I tend to use the term ‘machine learning’ for these programs, instead of the more widely used (and catchier) ‘artificial intelligence’. This is because I do not believe that these programs are intelligent. They are very predictable and do not make decisions that are not explicitly programmed. The reason you get seemingly random outputs from these programs is that they build their generations from pseudo-randomly seeded noise. The output would be identical if you were to generate a pair of images using identical prompts and seeds. Some machine learning programs let you access and reuse the seed.
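The point about seeds can be shown with Python's standard library alone. This is a toy sketch, not how any particular image generator is implemented: real programs start from much larger noise arrays and use their own random number generators, and the `make_noise` function is a name I made up.

```python
import random


def make_noise(seed: int, size: int = 4) -> list[float]:
    """Return `size` pseudo-random values from a generator seeded with `seed`."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


first = make_noise(seed=42)
second = make_noise(seed=42)
other = make_noise(seed=43)

print(first == second)  # True: same seed, same noise
print(first == other)   # False: different seed, different noise
```

If everything after the noise is deterministic, then the same prompt and the same seed lead to the same image.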

The Tech Isn’t Evil, Corporations Are

Machine learning is a method for computers to ‘learn’ algorithms based on sample data. How could something so abstract possibly be responsible for enraging so many craftspeople?

You may have noticed already, but the flaw is not in the technology—it’s in the product.

Machine learning programs that learn from ethically-sourced datasets already exist. An example of this is Google’s Lyra, a speech codec trained “with thousands of hours of audio with speakers in over 70 languages using open-source audio libraries and then verifying the audio quality with expert and crowdsourced listeners.”

As Loish has pointed out, Harmonai’s Dance Diffusion, a machine learning-based music generator also released by Stability AI, was trained exclusively on royalty-free music because “releasing a model trained on copyrighted data could potentially result in legal issues.”
